Optimizing XML Sitemaps for SEO

https://ohgm.co.uk

A great read from Oliver H.G. Mason on his findings from splitting XML sitemaps into smaller chunks. Instead of a few XML sitemaps capped at 50,000 URLs (the per-file maximum Google supports), he decided to use many XML sitemaps containing 10,000 URLs each.

Some SEOs recommend 1,000-URL sitemaps to work around Search Console’s reporting limits, so that the per-sitemap reports can be used to monitor which URLs aren’t getting indexed.
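To make the chunking concrete, here is a minimal Python sketch that splits a URL list into fixed-size sitemap files plus a sitemap index. It is not the author’s code: the `build_sitemaps` helper, the `https://example.com` base URL and the `posts-sitemap-N.xml` / `sitemap-index.xml` file names are hypothetical. The chunk size defaults to 10,000, can be lowered to 1,000, and is capped at 50,000, the per-file URL limit in the sitemaps protocol. The protocol’s 50 MB uncompressed size limit is not checked here.

```python
from xml.sax.saxutils import escape

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def chunk(urls, size):
    """Split a list of URLs into consecutive chunks of at most `size` URLs."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def render_urlset(urls):
    """Render one <urlset> sitemap document for the given URLs."""
    entries = "".join(f"<url><loc>{escape(u)}</loc></url>" for u in urls)
    return (f'<?xml version="1.0" encoding="UTF-8"?>'
            f'<urlset xmlns="{SITEMAP_NS}">{entries}</urlset>')


def render_index(sitemap_urls):
    """Render a <sitemapindex> document pointing at each chunked sitemap."""
    entries = "".join(f"<sitemap><loc>{escape(u)}</loc></sitemap>" for u in sitemap_urls)
    return (f'<?xml version="1.0" encoding="UTF-8"?>'
            f'<sitemapindex xmlns="{SITEMAP_NS}">{entries}</sitemapindex>')


def build_sitemaps(urls, base, size=10_000):
    """Return {filename: xml} for the chunked sitemaps plus a sitemap index.

    Hypothetical helper: file names follow a posts-sitemap-N.xml pattern.
    """
    if not 0 < size <= 50_000:
        raise ValueError("size must be between 1 and 50,000 URLs per sitemap")
    files = {}
    index_entries = []
    for n, part in enumerate(chunk(urls, size), start=1):
        name = f"posts-sitemap-{n}.xml"
        files[name] = render_urlset(part)
        index_entries.append(f"{base.rstrip('/')}/{name}")
    files["sitemap-index.xml"] = render_index(index_entries)
    return files
```

With 25,000 URLs and the default size this yields three sitemap files (10,000, 10,000 and 5,000 URLs) plus the index; with `size=1_000` it yields 25 sitemap files plus the index.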

He then talks about dynamically populating XML sitemaps based on what Googlebot is crawling. He outlines the process as follows:

- You have a list of URLs you want Googlebot to crawl.

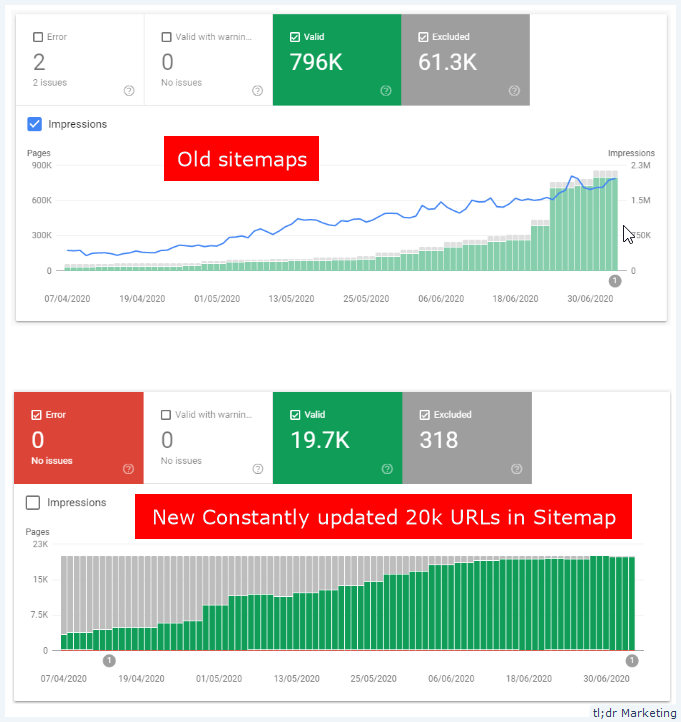

- You generate an XML sitemap, /uncrawled.xml, from a restricted slice of the most recent of these URLs (in this example, the top 20,000).

- You monitor your access logs for Googlebot requests.

- Whenever Googlebot requests one of the URLs you are monitoring, that URL is removed from your list, which also removes it from /uncrawled.xml.

- The crawled URL is then appended to the appropriate long-term XML sitemap (e.g. /posts-sitemap-45.xml). This step is optional.
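The steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the author’s implementation: the `UncrawledSitemap` class is hypothetical, access logs are assumed to be in the Apache/Nginx combined format, and `long_term` is a plain list standing in for the long-term sitemap. Googlebot is identified by a naive user-agent substring check; since user agents are easily spoofed, Google recommends confirming Googlebot with a reverse DNS lookup, which a real setup should add.

```python
import re
from xml.sax.saxutils import escape

# Matches the request path and user agent in a "combined" format log line.
# Assumption: your logs use this format; adjust the pattern if they don't.
LOG_RE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


class UncrawledSitemap:
    """Hypothetical tracker for the /uncrawled.xml workflow."""

    def __init__(self, base_url, urls, slice_size=20_000):
        if slice_size < 1:
            raise ValueError("slice_size must be positive")
        self.base_url = base_url.rstrip("/")
        # URLs Googlebot hasn't requested yet, oldest first
        # (dicts preserve insertion order).
        self.pending = dict.fromkeys(urls)
        self.slice_size = slice_size
        # Stand-in for the long-term sitemap (e.g. /posts-sitemap-45.xml).
        self.long_term = []

    def add(self, url):
        """Register a newly published URL; new keys go to the end (most recent)."""
        self.pending[url] = None

    def render(self):
        """Build /uncrawled.xml from the most recent `slice_size` pending URLs."""
        recent = list(self.pending)[-self.slice_size:][::-1]  # newest first
        entries = "".join(f"<url><loc>{escape(u)}</loc></url>" for u in recent)
        return ('<?xml version="1.0" encoding="UTF-8"?>'
                '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
                f"{entries}</urlset>")

    def process_log_line(self, line):
        """Drop a URL from the pending list when Googlebot requests it."""
        match = LOG_RE.search(line)
        # Naive user-agent check; verify Googlebot properly in production.
        if not match or "Googlebot" not in match["ua"]:
            return False
        url = self.base_url + match["path"]
        if url not in self.pending:
            return False
        del self.pending[url]        # removes it from /uncrawled.xml
        self.long_term.append(url)   # optional: move to the long-term sitemap
        return True
```

In practice you would feed each new access-log line to `process_log_line` and serve the output of `render()` at /uncrawled.xml, so the sitemap only ever lists recent URLs Googlebot hasn’t fetched yet.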

He also talks about how you can dynamically control your internal linking in response to the URLs Googlebot is crawling to further improve Google’s crawl of your content.
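The description above doesn’t spell out how the internal linking is controlled, so the following is only one possible approach, sketched in Python under assumptions: a hypothetical `pick_internal_links` helper fills an internal-links block (for example a “recent posts” module) with not-yet-crawled URLs first, oldest first, and tops it up from a fallback list such as your normal related links.

```python
from itertools import islice


def pick_internal_links(pending_urls, fallback_urls, k=5):
    """Fill an internal-links block with up to k URLs, uncrawled ones first.

    Hypothetical helper. `pending_urls` is assumed to be ordered oldest
    first, so URLs that have waited longest for a Googlebot visit are
    linked first; `fallback_urls` fills any remaining slots.
    """
    chosen = list(islice(pending_urls, k))
    seen = set(chosen)
    for url in fallback_urls:
        if len(chosen) >= k:
            break
        if url not in seen:  # avoid linking the same URL twice
            chosen.append(url)
            seen.add(url)
    return chosen
```

As Googlebot crawls URLs and they drop out of the pending list, the block naturally rotates towards whatever is still uncrawled.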